Meta’s own research: Instagram safety systems fail to shield teens from harmful content

October 20, 2025

Meta’s own research showed that the company’s existing safety systems failed to detect 98.5% of potentially inappropriate content for teens.

Internal research conducted by Meta found that teenagers who frequently reported feeling bad about their bodies after using Instagram were exposed to about three times as much “eating disorder adjacent” content as their peers, according to documents reviewed exclusively by Reuters.

The study, which surveyed 1,149 teens during the 2023-2024 academic year, found that body-focused content made up 10.5% of these vulnerable adolescents’ Instagram feeds, compared with just 3.3% for other teenagers.

Researchers found that the 223 teens who often felt bad about their bodies after viewing Instagram encountered significantly more posts featuring “prominent display” of body parts, “explicit judgment” about body types, and content related to disordered eating and negative body image.

Safety systems fail to detect harmful content

Perhaps most concerning, Meta’s own research showed that the company’s existing safety systems failed to detect 98.5% of potentially inappropriate content for teens. The researchers noted this finding was “not necessarily surprising” since Meta had only recently begun developing algorithms to identify such harmful material.

The vulnerable teens in the study also encountered more provocative content overall: material Meta classifies as “mature themes,” “risky behavior,” “harm & cruelty” and “suffering” made up 27% of their feeds, compared with 13.6% for other teens.

Jenny Radesky, an associate professor of pediatrics at the University of Michigan, reviewed the unpublished research and told Reuters that the study’s methodology was robust and its findings disturbing.

“This supports the idea that teens with psychological vulnerabilities are being profiled by Instagram and fed more harmful content,” Radesky said. “We know that a lot of what people consume on social media comes from the feed, not from search.”

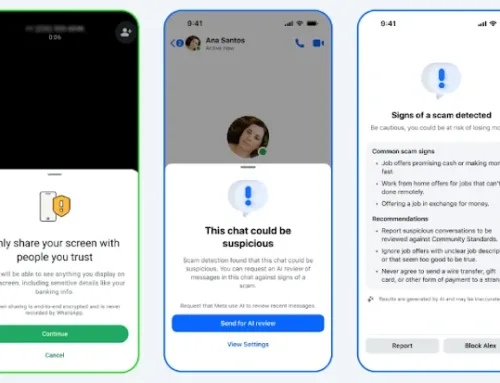

Meta introduces PG-13 content standards

The findings come shortly after Meta announced new safety measures earlier this month, applying PG-13 content standards to all teen accounts on Instagram. Starting this week, teenagers will automatically be restricted to content in line with the movie industry’s PG-13 rating, and only parents will be able to loosen these settings.

Under the new guidelines, teens will be blocked from seeing posts containing strong language, dangerous stunts, or content that could promote risky behavior such as marijuana use. The platform will also prevent teens from following accounts that frequently share age-inappropriate content.

Meta spokesperson Andy Stone said the company has halved the amount of age-restricted content shown to teenage Instagram users since July 2025. “This research is further proof we remain committed to understanding young people’s experiences and using those insights to build safer, more supportive platforms for teens,” Stone said.

The social media giant faces ongoing federal and state investigations over Instagram’s effects on children, as well as civil lawsuits from school districts alleging harmful product design and deceptive marketing. The internal study, marked “Do not distribute internally or externally without permission,” adds to a growing body of evidence about the platform’s impact on adolescent mental health.
