Cracking Down on Spammy Content on Facebook

April 24, 2025

We’ve heard you. Facebook Feed doesn’t always serve up fresh, engaging posts that you consistently enjoy. We’re working on it. We’re making a number of changes this year to improve Feed, help creators break through and give people more control over how content is personalized to them.

We recently introduced a new Friends tab in the US to help bring back the “OG” Facebook experience. Now we’re launching an initiative to further crack down on spammy content. Some accounts try to game the Facebook algorithm to increase views, reach a higher follower count faster or gain unfair monetization advantages. While the intentions are not always malicious, the result is spammy content in Feed that crowds out authentic creator content.

Moving forward, we’re taking a number of steps to reduce this spammy content and help authentic creators reach an audience and grow.

Lowering the Reach of Accounts Sharing Spammy Content

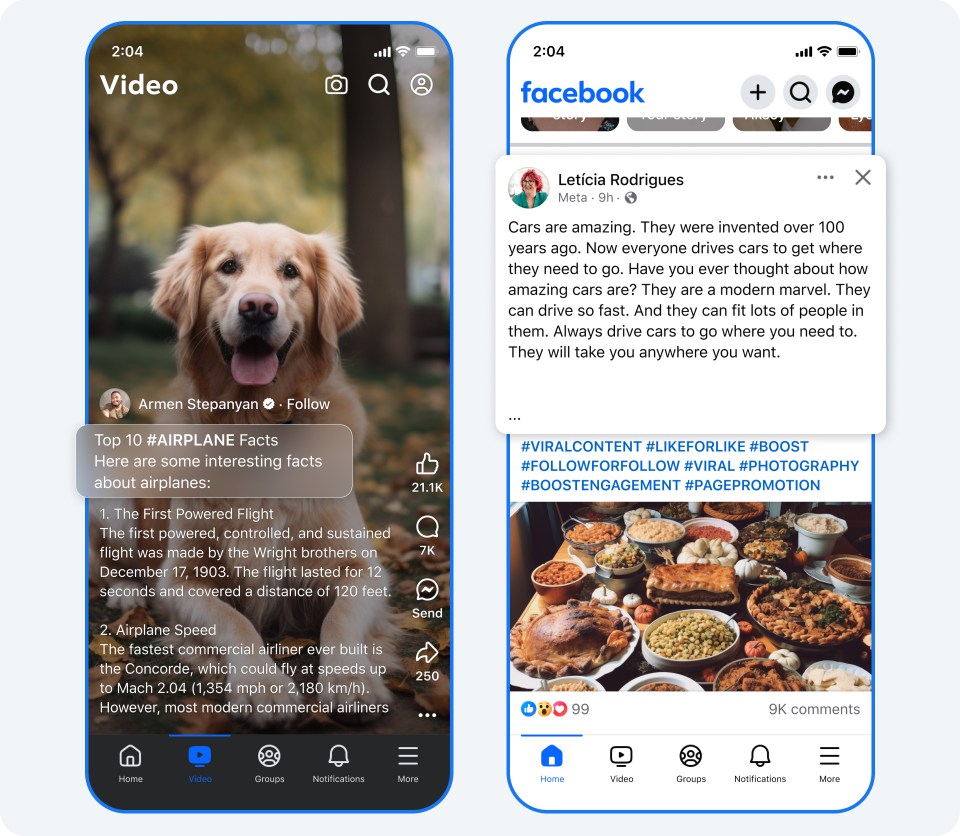

- Some accounts post content with long, distracting captions, often with an inordinate number of hashtags. Others include captions that are completely unrelated to the content – think a picture of a cute dog with a caption about airplane facts. Accounts that engage in these tactics will only have their content shown to their followers and will not be eligible for monetization.

- Spam networks often create hundreds of accounts to share the same spammy content that clutters people’s Feed. Accounts we find engaging in this behavior will not be eligible for monetization and may see lower audience reach.

Investing More to Remove Accounts That Coordinate Fake Engagement and Impersonate Others

- Spam networks that coordinate fake engagement are an unfortunate reality for all social apps. We’re going to take more aggressive steps on Facebook to prevent this behavior. For example, comments that we detect as coordinated fake engagement will be seen less. We also continue to monitor and remove fake Pages that exist to inflate reach – in 2024 we took down more than 100 million fake Pages engaging in scripted follows abuse on Facebook. Along with these efforts, we’re also exploring ways to elevate more meaningful and engaging discussions. For example, we’re testing a comments feature so people can flag comments that are irrelevant or don’t fit the spirit of the conversation.

- Creators are often targets of other accounts pretending to be them. In 2024, we took down over 23 million profiles that were impersonating large content producers. While we’ve made a lot of progress, there’s more work to do. In addition to the proactive detection and enforcements we have in place to identify and remove imposters, we’ve added features to Moderation Assist, Facebook’s comment management tool, to detect and auto-hide comments from people potentially using a fake identity. Creators will also be able to report impersonators in the comments.

Continuing to Protect and Elevate Creators Sharing Original Content

The content that creators share is an expression of themselves. When other accounts reuse their content without their permission, it takes unfair advantage of their hard work. We continue to enhance Rights Manager to help creators protect their original content. We’re also providing guidance to help creators making original, engaging content succeed on Facebook. Learn more here.

Meta’s platforms are built to be places where people can express themselves freely. Spammy content can get in the way of people’s ability to have their voices heard, regardless of their viewpoint, which is why we’re targeting the behavior that’s gaming distribution and monetization. We want the creator community to know that we’re committed to rewarding creators who create and share engaging content on Facebook. This is just one of the many investments to ensure creators can succeed on Facebook.
