Introducing Vulcan: Amazon’s first robot with a sense of touch

May 7, 2025


Built on advances in robotics, engineering, and physical AI, Vulcan is making our workers’ jobs easier and safer while moving orders more efficiently.

The next time you drop a coin on the ground, consider how you go about picking it up again. Maybe your hearing tells you which way it bounced. Your vision lets you zero in on its location. And for the final, crucial act of getting it from the ground to your hand, you rely on your sense of touch to know exactly when to clasp your fingers together and how to flip it into your palm or your pocket.

But what many humans do so easily, few robots can tackle. For all their accomplishments—defeating chess masters, driving around city streets, pulling entire kennels’ worth of dog hair out of carpets—most robots are unfeeling, and not just in the emotional sense.

The typical robot, especially one that works in a commercial setting, is “numb and dumb,” says Aaron Parness, Amazon director of applied science. “In the past, when industrial robots have unexpected contact, they either emergency stop or smash through that contact. They often don’t even know they have hit something because they cannot sense it.”

Vulcan’s ability to pick and stow items makes our associates’ jobs easier and our operations more efficient.

Today at our Delivering the Future event in Dortmund, Germany, we’re introducing a robot that is neither numb nor dumb. Built on key advances in robotics, engineering, and physical AI, Vulcan is our first robot with a sense of touch.

“Vulcan represents a fundamental leap forward in robotics,” Parness says. “It’s not just seeing the world, it’s feeling it, enabling capabilities that were impossible for Amazon robots until now.”

And it’s already changing the way we operate our fulfillment centers, helping make our employees’ jobs safer and easier while moving customers’ orders more efficiently.

“Working alongside Vulcan, we can pick and stow with greater ease,” says Kari Freitas Hardy, a front-line employee at GEG1, a fulfillment center in Spokane, Washington. “It’s great to see how many of my co-workers have gained new job skills and taken on more technical roles, like I did, once they started working closer with the technology at our sites.”

The power of touch

Vulcan is not our first robot that can pick things up. Our Sparrow, Cardinal, and Robin systems use computer vision and suction cups to move individual products or packages packed by human workers. Proteus, Titan, and Hercules lift and haul carts of goods around our fulfillment centers.

But with its sense of touch (its ability to understand when and how it makes contact with an object), Vulcan unlocks new ways to improve jobs and facilities across our operations.

In our fulfillment centers, we maximize efficiency by storing inventory in fabric-covered pods that are divided into compartments about a foot square, each of which holds up to 10 items on average. Fitting an item into or plucking one out of this crowded space has historically been challenging for robots that lack the natural dexterity of humans.

Vulcan is our first robot with a similar kind of finesse. Vulcan can easily manipulate objects within those compartments to make room for whatever it’s stowing, because it knows when it makes contact and how much force it’s applying and can stop short of doing any damage.

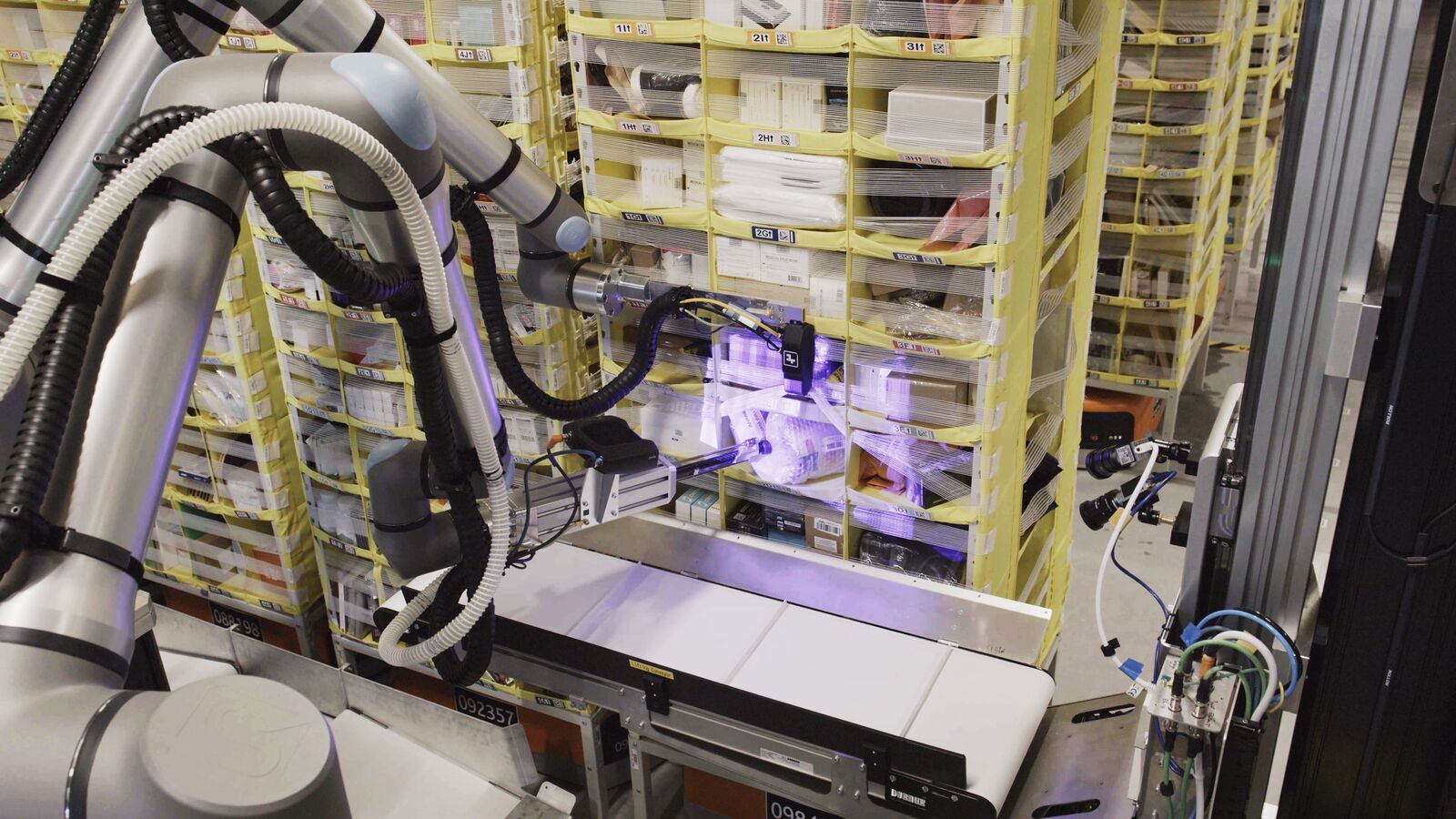

Vulcan does this using “end-of-arm tooling” that resembles a ruler stuck onto a hair straightener, plus force feedback sensors that tell it how hard it’s pushing or how firmly it’s holding something, so it can stay below the point at which it risks doing damage.

The ruler bit pushes around the items already in those compartments to make room for whatever it wants to add. The arms of the hair straightener (the “paddles”) hold the item to be added, adjusting their grip strength based on the item’s size and shape, then use built-in conveyor belts to zhoop the item into the bin.
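Amazon hasn’t published Vulcan’s control code. To illustrate the general idea of “stay below the point at which it risks doing damage,” here is a minimal, hypothetical sketch of a force-limited push: the tool advances in small steps and checks a force reading before each step, stopping once contact force reaches a limit. The function names, the simulated bin, and all numbers (step size, stiffness, force limit) are illustrative assumptions only.

```python
from typing import Callable


def force_limited_push(
    read_force_newtons: Callable[[], float],
    step_forward: Callable[[float], None],
    max_force_newtons: float,
    step_mm: float = 1.0,
    max_travel_mm: float = 100.0,
) -> float:
    """Hypothetical sketch: advance in small steps, stopping once the
    measured contact force reaches the limit. Returns distance travelled (mm)."""
    travelled = 0.0
    while travelled + step_mm <= max_travel_mm:
        # Check the (assumed) force sensor before every increment.
        if read_force_newtons() >= max_force_newtons:
            break  # contact is firm enough: stop short of damage
        step_forward(step_mm)
        travelled += step_mm
    return travelled


class SimulatedBin:
    """Toy stand-in for a sensor: force rises linearly after first contact."""

    def __init__(self, contact_mm: float, stiffness_n_per_mm: float):
        self.position = 0.0
        self.contact_mm = contact_mm
        self.stiffness = stiffness_n_per_mm

    def force(self) -> float:
        return max(0.0, (self.position - self.contact_mm) * self.stiffness)

    def move(self, distance_mm: float) -> None:
        self.position += distance_mm


bin_ = SimulatedBin(contact_mm=20.0, stiffness_n_per_mm=0.5)
print(force_limited_push(bin_.force, bin_.move, max_force_newtons=5.0))  # → 30.0
```

Checking force before each small step, rather than after one large move, is what lets a loop like this stop close to the limit instead of overshooting it.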

For picking items from those bins, Vulcan uses an arm that carries a camera and a suction cup. The camera looks at the compartment and picks out the item to be grabbed, along with the best spot to hold it by. While the suction cup grabs it, the camera watches to make sure it took the right thing and only the right thing, avoiding what our engineers call the risk of “co-extracting non-target items.”
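How Vulcan’s camera actually checks a pick isn’t described here. One simple way to express the “only the right thing” rule, assuming a hypothetical vision step that returns a list of item labels visible in the compartment before and after the grab, is to require that exactly one unit of the target item disappeared and nothing else did. The function name and the label-list inputs are illustrative assumptions, not Amazon’s method.

```python
from collections import Counter
from typing import Iterable


def verify_single_pick(before: Iterable[str], after: Iterable[str], target: str) -> bool:
    """Hypothetical check: True only if exactly one unit of `target` left the
    compartment and no other item was co-extracted with it."""
    # Counter subtraction keeps only positive counts, i.e. items that went missing.
    removed = Counter(before) - Counter(after)
    return removed == Counter({target: 1})


before = ["sock", "toothpaste", "paper clips"]
print(verify_single_pick(before, ["sock", "paper clips"], "toothpaste"))  # → True
print(verify_single_pick(before, ["sock"], "toothpaste"))  # → False (paper clips came along too)
```

Counting items rather than comparing sets means the check still works when a compartment holds several identical units.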

Vulcan uses an arm that carries a camera and a suction cup to pick items from our storage pods.

With the ability to pick and stow approximately 75% of the types of items we store at our fulfillment centers, at speeds comparable to those of our front-line employees, Vulcan represents a step change in how automation and AI can assist our employees in their everyday tasks. It also has the smarts to identify when it can’t move a specific item and ask a human partner to tag in, helping us leverage the best of what our technology and employees can achieve by working together.

The human-robot connection

We did all this work to improve not just efficiency, but also worker safety and ergonomics. At our fulfillment centers in Spokane, Washington, and Hamburg, Germany, Vulcan is focused on picking and stowing inventory in the top rows of those inventory pods. Because those rows are about 8 feet up, employees typically need a step ladder to reach them, a process that’s time-consuming, tiring, and less ergonomic than picking and stowing at midriff height. Vulcan also handles items stowed just above the floor, so our employees can work where they’re most comfortable.

“Vulcan works alongside our employees, and the combination is better than either on their own,” says Parness.

Vulcan will let our associates spend less time on step ladders and more time working in their power zone.

This application of Vulcan’s capabilities is just the latest example of how we think about and use this kind of technology. Over the past dozen years, we’ve deployed more than 750,000 robots into our fulfillment centers, all of them designed to help our employees work safely and efficiently by taking on physically taxing parts of the fulfillment process.

Meanwhile, these robots—which play a role in completing 75% of customer orders—have created hundreds of new categories of jobs at Amazon, from robotic floor monitors to on-site reliability maintenance engineers. We also offer training programs like Career Choice, which help our employees move into robotics and other high-tech fields.

Picking problems

Vulcan’s technology isn’t handy by happenstance. Just as it fits in with our approach to robotics, it’s one more example of how we innovate: We pick out important problems and find or develop solutions—we don’t create interesting tech and then look for ways to use it.

Vulcan started with our realization that every time one of our employees has to use a ladder to reach the upper parts of our storage pods, they’re spending time on a less ergonomic and less efficient task. Adding a robot to this process required years of work on all sorts of tech: force feedback sensors, a “hand” that can carefully handle millions of unique items, a tool for nudging boxes and baggies of all shapes and sizes, and a stereo vision system to estimate where there’s available space in a bin.

Vulcan represents “a technology that three years ago seemed impossible but is now set to help transform our operations,” says Aaron Parness, Amazon’s director of robotics AI.

It also required the novel application of physical AI, including algorithms for identifying which items Vulcan can or can’t handle, finding space within bins, recognizing tubes of toothpaste and boxes of paper clips, and much more. And rather than teaching Vulcan with computer simulations alone, we trained its AI on physical data that incorporates touch and force feedback. It tackled thousands of real-world examples, from picking up socks to moving fragile electronics.

Vulcan even learns from its own failures, figuring out how different objects behave when touched and steadily building up an understanding of the physical world, just like kids do. So, you can expect it to become smarter and more capable in the years to come.

The result, Parness says, is “a technology that three years ago seemed impossible but is now set to help transform our operations.”

Touchdown

That transformation is on its way not just because Vulcan’s so capable, but because we implement our best work at Amazon scale. We plan to deploy Vulcan systems over the next couple of years, at sites throughout Europe and the United States.

“Our vision is to scale this technology across our network, enhancing operational efficiency, improving workplace safety, and supporting our employees by reducing physically demanding tasks,” Parness says.

Better operational efficiency translates to getting the right product to the right truck at ever faster speeds, allowing us to keep widening our selection and offering industry-leading prices.

And all it took was teaching a robot to feel.
