Meta faces MPA backlash over Instagram PG-13 label

November 15, 2025

Meta’s teen safety update hits a legal snag as the Motion Picture Association objects to the use of its film rating

Meta is facing a legal challenge from the Motion Picture Association (MPA) after using the term “PG-13” to describe Instagram’s new content filtering system for teen users. The association issued a cease-and-desist letter objecting to what it called “highly misleading” marketing and the unauthorized use of a term central to the film industry’s ratings system.

At first glance, this might seem like a Hollywood vs. tech turf war. But for marketers, it’s a deeper signal that industry-standard labels, especially those used to signify content safety, can carry unintended consequences when repurposed. With regulators, parents, and platforms all watching closely, brand messaging around youth safety is now a legal and reputational risk zone.

This article breaks down the clash between Meta and the MPA, unpacks the implications for content and brand safety on social platforms, and shares tips on how marketers can navigate rising scrutiny around teen-focused digital experiences.


What happened?

According to NBC News, the MPA sent Meta a cease-and-desist letter dated October 28, objecting to the use of “PG-13” in a recent Instagram update that restructured default content settings for teen users. The association claims the label, which it holds as a registered certification mark, was misused in Meta’s public materials and could undermine trust in the film industry’s official ratings.

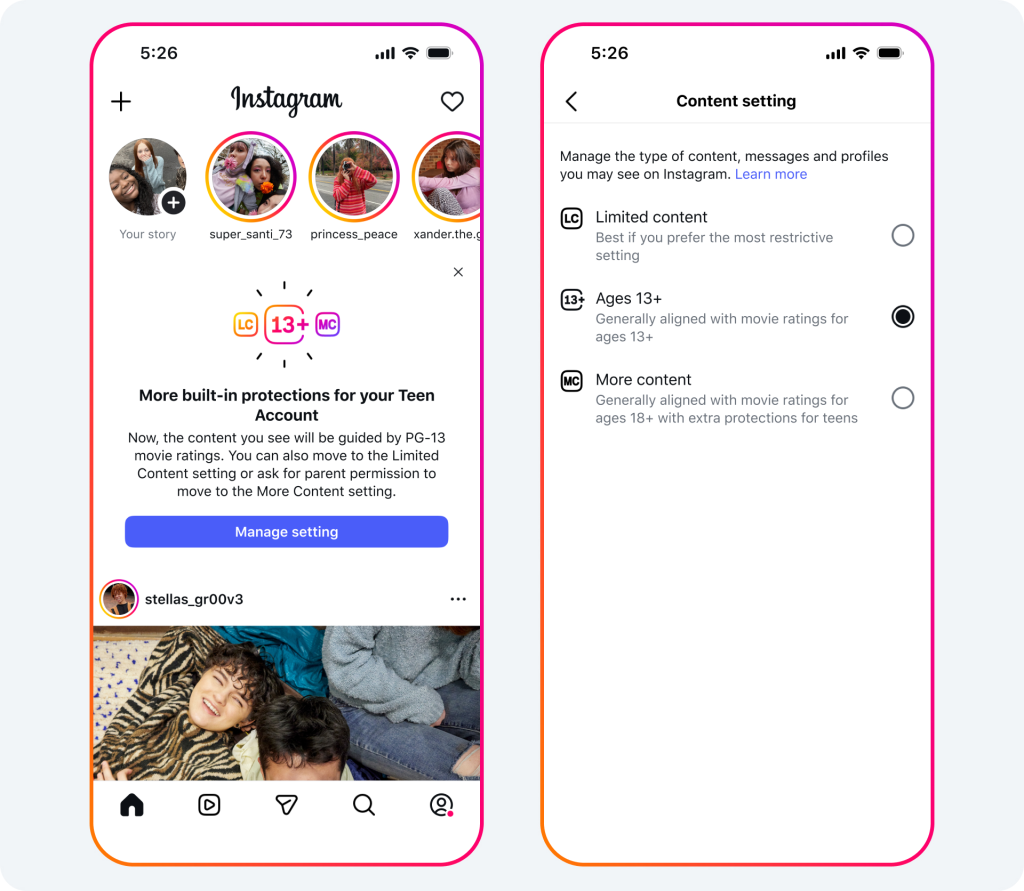

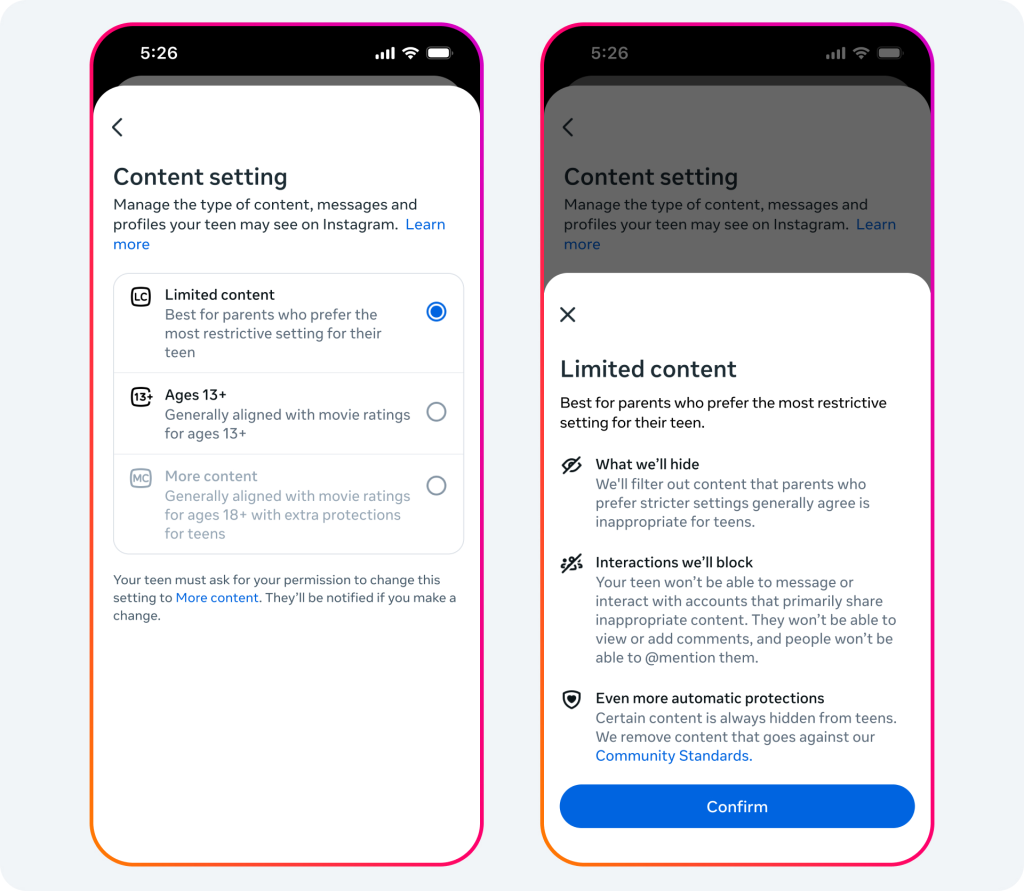

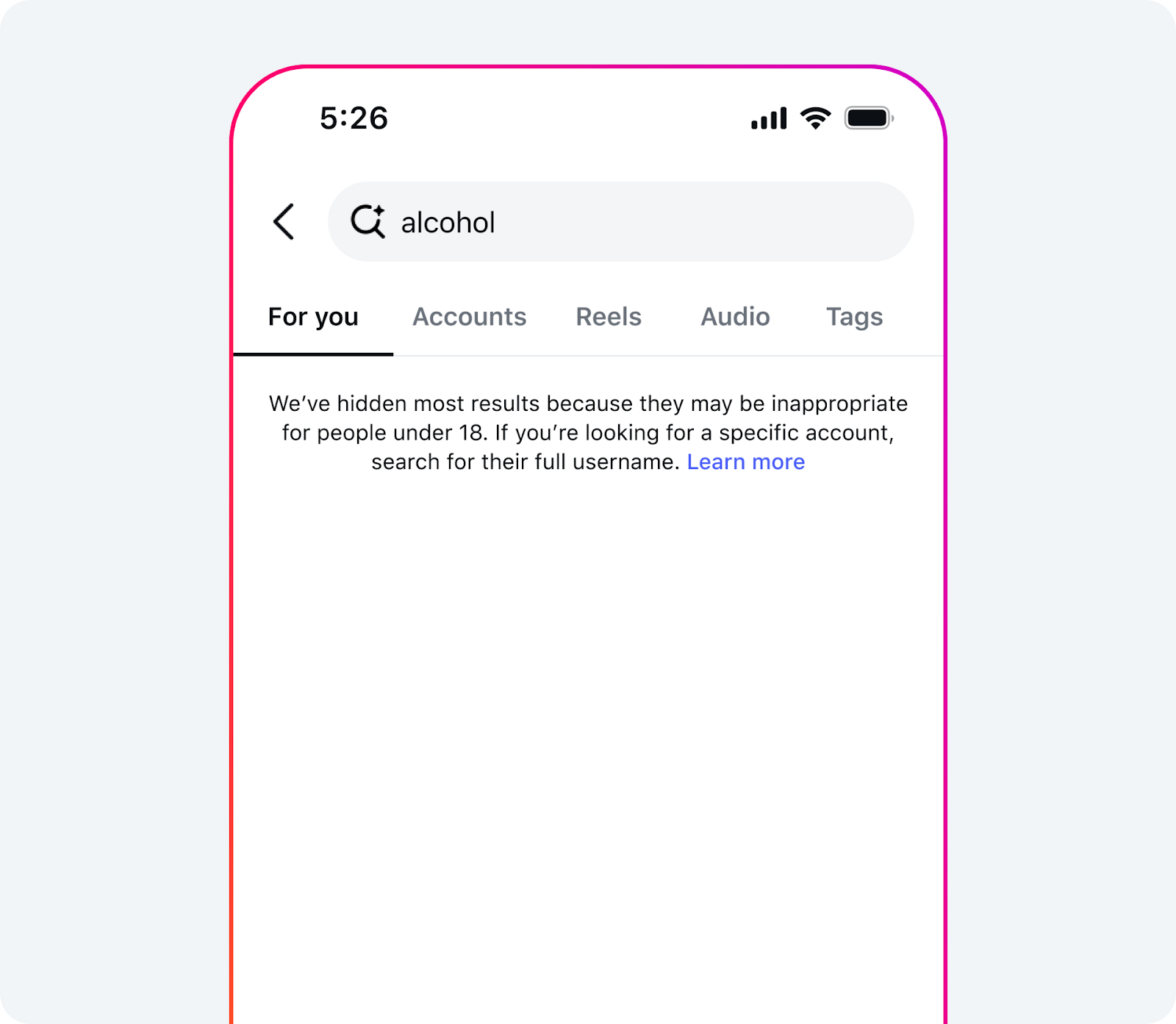

The controversy stems from Meta’s October blog post, where it said the revamped Teen Accounts feature was “guided by PG-13 movie ratings.” The update automatically places users under 18 into a “13+” setting, with an optional “Limited Content” tier for stricter parental controls.

Meta said the PG-13 reference was meant to help parents understand the platform’s safety goals in familiar terms, not to suggest an official partnership with the MPA.

Still, the association pushed back hard, arguing that Meta’s reliance on AI and automation in classifying content bears no resemblance to its human-led, context-based rating system.

Why it matters now

The tension between Meta and the MPA is more than just a legal scuffle over branding. It reflects a broader clash between legacy media institutions and AI-powered platforms over who defines what’s appropriate for younger audiences.

The MPA emphasized that its ratings process relies on consensus among a group of independent parents, while Meta’s approach is algorithmically driven. That difference could set a precedent for how content classification is governed across industries and how much leeway tech platforms have in adapting well-known standards.

It also raises red flags around content labeling integrity, especially as AI plays a bigger role in moderation. Marketers navigating family-friendly or teen-oriented content now need to think twice about the terms they use to describe it.

What marketers should know

Here’s how this legal dust-up affects your marketing strategy and what you can do about it.

1. Be cautious with industry-standard labels

Don’t assume that popular cultural terms like “PG-13” or “family safe” are fair game. These phrases can carry legal weight or imply partnerships that don’t exist. When in doubt, create custom descriptors that reflect your platform or campaign’s actual safeguards.

2. Watch for evolving brand safety frameworks

Platforms like Instagram are shifting to AI-first moderation models, but that doesn’t mean regulators and industry watchdogs are comfortable with it. Marketers should follow how classification systems evolve and check that their own content policies align with platform updates and legal definitions.

3. Build clarity into your parental messaging

If your product, service, or campaign targets families or younger audiences, make sure your messaging is specific, transparent, and legally sound. Generic terms can invite confusion or, worse, legal scrutiny.

Meta’s update reflects a larger industry shift toward automated teen safety features. Brands should assess whether their content or influencer strategies still reach the intended age groups, especially as AI curates more of the online experience.

This clash between Meta and the MPA is a cautionary tale for any marketer working near the edges of youth content, family audiences, or regulatory language. As platform policies tighten and automation expands, the margin for error in brand messaging keeps shrinking.

Marketers don’t need to avoid safety claims, but they do need to back them up with clarity, accuracy, and a good understanding of the legal and cultural baggage certain terms carry. What starts as a helpful metaphor for parents could quickly turn into a reputational misstep if not handled carefully.

This article was created by humans with AI assistance, powered by ContentGrow.
