Meta Platforms Metamorphizing Into An AI Cloud For Sovereigns

January 23, 2026

Meta Platforms is not happy just being the social network for the world anymore. Its aspirations, driven by the GenAI boom, are much, much larger. So much so that the company has to figure out a way to work with the national governments of the world to become a player without actually being a provider of cloud services, which is how (in alphabetical order) Alibaba, Amazon Web Services, Baidu, Google, Microsoft Azure, and Tencent have forged natural alliances with governments as the practicality and necessity of sovereign AI clouds have sunk in.

If national security is created by, enhanced by, or dependent upon AI, then the infrastructure it relies upon has to be subject to the needs of national governments. This seems obvious once you say it out loud.

But Meta Platforms is not a cloud, even though its recent venture into selling API capacity for its Llama large language models was its first attempt at being a platform cloud (PaaS) or software cloud (SaaS), depending on how you want to draw the lines. We do not think that Meta Platforms has the desire to be an infrastructure cloud (IaaS) like the six companies mentioned above, but stranger things have happened.

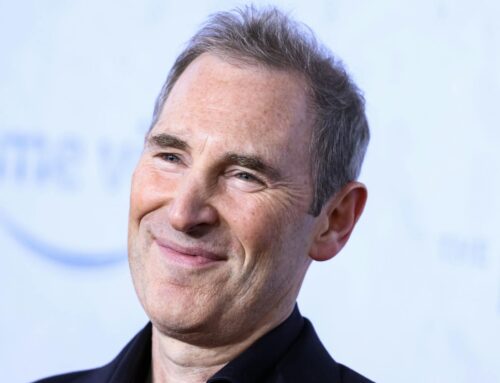

What Meta Platforms does want to do, however, is get its infrastructure and balance sheet act together as it deploys tens of gigawatts of AI capacity in the next several years on the way to its goal of deploying hundreds of gigawatts further out. Each gigawatt of capacity costs somewhere between $40 billion and $60 billion, depending on what it is and where it is, so this is an absolutely enormous amount of money that Meta Platforms co-founder and chief executive officer Mark Zuckerberg has talked about in posts on Threads this month.

“How we engineer, invest, and partner to build this infrastructure will become a strategic advantage,” Zuckerberg said in his post, with the goal of providing “personal superintelligence to billions of people around the world.”
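To put the per-gigawatt figures in perspective, here is a quick back-of-the-envelope sketch. It simply multiplies capacity by the $40 billion to $60 billion per gigawatt range cited above. The 10 gigawatt and 100 gigawatt values are our own illustrative stand-ins for "tens" and "hundreds" of gigawatts, not numbers Meta Platforms has given, and the sketch ignores financing costs, phasing, and price changes over time.

```python
# Back-of-the-envelope capex sketch using the article's $40B-$60B per gigawatt range.
# The gigawatt counts (10 and 100) are illustrative stand-ins for "tens" and
# "hundreds" of gigawatts, not figures Meta Platforms has committed to.

COST_PER_GW_LOW = 40e9   # dollars per gigawatt, low end of the range
COST_PER_GW_HIGH = 60e9  # dollars per gigawatt, high end of the range


def capex_range(gigawatts: float) -> tuple[float, float]:
    """Return (low, high) total build cost in dollars for a given capacity."""
    return gigawatts * COST_PER_GW_LOW, gigawatts * COST_PER_GW_HIGH


if __name__ == "__main__":
    for gw in (10, 100):
        low, high = capex_range(gw)
        # Report in trillions of dollars to keep the numbers readable.
        print(f"{gw:>4} GW: ${low / 1e12:.1f}T to ${high / 1e12:.1f}T")
```

Even at 100 gigawatts, the low end of the range already lands in the trillions of dollars, which is why the money side of this effort matters as much as the engineering side.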

And thus, Zuckerberg has created a new “top-level” initiative called Meta Compute. No, this is not the new name of the Facebook Engineering blog that we all got to know so well over the past decade, but a political and technical organization that will help Zuck & Co work with sovereign governments and sovereign wealth funds to provide compute, storage, and networking for what we presume will be a mix of open source and closed source LLMs. Last July, as the company was having difficulty getting its Llama 4 Behemoth model, which has nearly 2 trillion total parameters spread across 16 experts and 288 billion active parameters, to work right, there were rumors going around that Meta Platforms would shift some of its models to closed source.
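The Behemoth figures show both why mixture of experts models are attractive and why they are expensive to serve. Here is a minimal sketch, assuming roughly 2 trillion total and 288 billion active parameters as stated above, plus one assumption of our own (not a Meta figure): one byte per weight, as with FP8. Compute per token scales with the active parameters, but every expert still has to sit in memory somewhere.

```python
# Mixture-of-experts arithmetic for a Behemoth-class model.
# Assumptions: ~2 trillion total parameters and 288 billion active parameters
# (figures from the article), and 1 byte per weight (FP8), which is our guess.

TOTAL_PARAMS = 2.0e12   # approximate total parameter count
ACTIVE_PARAMS = 288e9   # parameters used to compute each token
BYTES_PER_PARAM = 1     # assumption: FP8 weights; BF16 would be 2


def active_fraction(active: float, total: float) -> float:
    """Share of the weights that participate in computing a single token."""
    return active / total


def weight_memory_tb(total: float, bytes_per_param: float) -> float:
    """Memory needed just to hold all of the weights, in terabytes (1e12 bytes)."""
    return total * bytes_per_param / 1e12


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    mem = weight_memory_tb(TOTAL_PARAMS, BYTES_PER_PARAM)
    print(f"Active per token: {frac:.1%}")
    print(f"Weight memory: {mem:.1f} TB, before KV cache and activations")
```

Only about one in seven weights does work on any given token, but all of them have to be resident across the accelerators serving the model, which is one reason models of this size are costly to run.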

But it may just turn out that the company will allow other closed source models to run inside its datacenters, both for itself and at the behest of the sovereigns it partners with. Or it may do both, as part of the acquihire of the key team from Scale AI and its $14.8 billion investment (a 49 percent stake) in that AI startup headed by Alexandr Wang, who is now chief AI officer at Meta Platforms. Scale AI has made a business out of making Llama and Claude models from Anthropic consumable by and supportable for enterprises as well as providing tools to knock datasets into shape so they can feed into AI training runs.

Wang’s team brought over from Scale AI is one of the kernels of the Superintelligence Lab that Zuck & Co have spent untold billions of dollars funding in the past year, bringing in fresh AI research talent and counterbalancing the loss of Yann LeCun, the long-time leader of the Facebook AI Research lab, a professor at New York University, and one of the key researchers behind the development of the convolutional neural network more than three decades ago. (LeCun left FAIR a few weeks ago to start Advanced Machine Intelligence Labs, and has made no secret that he does not think the future of AI is GenAI LLMs.)

So that is the software that Meta Platforms wants to run in its AI datacenters. But the hardware that will go into those datacenters, and the money to build the iron and the datacenters, is now the job of the new Meta Compute organization.

Trying to get the infrastructure ropes to all pull in the same direction will be the task of Dina Powell McCormick, who is now president and vice chairman of the company. Technically, that makes her second in command at the social network and AI powerhouse wannabe, but titles don’t always confer power. The titles are meant to convey the idea that Meta Platforms cannot pull this off without an interface to the sovereigns of the world (nations, wealth funds, private equity collectives, whatever) and someone with political connections in the Trump and Bush administrations.

Powell McCormick attended the University of Texas at Austin and helped pay for college by working as a legislative assistant for two Republicans in the Texas state senate. She joined George W. Bush’s administration in his first term and rose to a senior White House staff job managing the personnel the president hires for his cabinet as well as for ambassadorships. She was later tapped to be Assistant Secretary of State for Educational and Cultural Affairs. She joined investment bank Goldman Sachs in 2008 and made partner in 2010; she ran the company’s foundation, among other things. In 2017, Powell McCormick joined the first Trump administration, first as a senior advisor, a role that had her managing a $200 billion arms deal with Saudi Arabia, and later as deputy National Security Advisor for strategy. Powell McCormick returned to Goldman Sachs in early 2018, eventually being put in charge of the bank’s Sovereign Fund (meaning its management of sovereign wealth funds), and helped take Saudi Aramco public in 2019. More recently, since 2023 she has been a partner at merchant bank BDT & MSD Partners. Yes, the MSD in MSD Partners is, you guessed it, Michael S. Dell, the famous founder of the infrastructure peddler located in Austin. (BDT is for Byron Trott, who was vice chairman for investment banking at Goldman Sachs.)

To say that Powell McCormick is connected to money and power is an understatement. And Zuck & Co know that all of the company’s aspirations are going to need to be funded by more than its own advertising stream. Meta Platforms could post $200 billion in sales for 2025. The company will have around $45 billion in cash and will have brought in something like $75 billion in net earnings for 2025, so this is a very profitable business. But clearly, those hundreds of gigawatts of AI datacenters will cost trillions of dollars, which means that Meta Platforms has to make money running AI models for third parties, and it can do that by borrowing money from them to build datacenters that run those models for a fee.
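A crude way to see the funding gap is to ask how many years of net earnings a build-out would eat. The sketch below assumes, purely for illustration, the $50 billion midpoint of the $40 billion to $60 billion per gigawatt range and flat net earnings of $75 billion a year. It ignores depreciation, taxes, operating costs, and any revenue the new capacity generates, so treat it as a sense of scale, not a forecast.

```python
# Years of net earnings needed to pay for an AI datacenter build-out.
# Assumptions (illustrative only): $75B/year flat net earnings, per the article's
# 2025 estimate, and $50B per gigawatt, the midpoint of the $40B-$60B range.

NET_EARNINGS_PER_YEAR = 75e9
COST_PER_GW_MID = 50e9


def years_of_earnings(gigawatts: float,
                      cost_per_gw: float = COST_PER_GW_MID,
                      annual_earnings: float = NET_EARNINGS_PER_YEAR) -> float:
    """Total build cost divided by one year's net earnings."""
    return gigawatts * cost_per_gw / annual_earnings


if __name__ == "__main__":
    # 10 GW stands in for "tens" of gigawatts, 100 GW for "hundreds".
    for gw in (10, 100):
        print(f"{gw:>4} GW: {years_of_earnings(gw):.1f} years of net earnings")
```

Even the tens-of-gigawatts phase runs to more than six years of profits under these assumptions, and the hundreds-of-gigawatts ambition runs to many decades, which is the arithmetic behind going to sovereigns and their wealth funds for money.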

To do that profitably (or at least less expensively), Meta Platforms is going to have to take the same holistic approach that has always underpinned the Open Compute Project: It is going to have to advance the state of the art in systems and datacenter designs, and generate and chew on tokens for less money than its hyperscaler and cloud builder peers. The ongoing efforts to create MTIA accelerators for inference (and, we think, for training) and the acquisition of RISC-V CPU and GPU designer Rivos last fall are part of the Meta Compute picture that has to be brought into tighter focus. You can’t pay Nvidia its margins and be a low-cost token consumer or peddler. You might not even be able to pay AMD its much lower margins and be in the token business.

Hence the desire of OpenAI, as well as the cloud builders and model builders, to create their own CPUs and XPUs in the fullness of time. This is a huge undertaking, particularly when the nature of AI processing is changing so fast.

Daniel Gross created a search engine called Cue that was acquired by Apple in 2013, has been investing in AI startups like Perplexity AI and CoreWeave and other upstarts like Uber and GitHub since 2017, and co-founded Safe Superintelligence Inc. He joined the Superintelligence Lab at Meta Platforms last July. Oddly enough, Zuck has put Gross in charge of a new group at the company that will be responsible for “long-term capacity strategy, supplier partnerships, industry analysis, planning, and business modeling.”

Santosh Janardhan, who has been head of global infrastructure and co-head of engineering at Meta Platforms for nearly three years, retains that role and, as Zuck put it, “will continue to lead our technical architecture, software stack, silicon program, developer productivity, and building and operating our global datacenter fleet and network.” Janardhan was a database and storage manager at PayPal, eBay, Google, and YouTube before joining Facebook in October 2009 to do the same job. Over the past 16 years, Janardhan has risen through the infrastructure engineering ranks.

We have said from the beginning that Facebook and now Meta Platforms is the company that could most easily create its own compute engines because it does not have an IaaS business it needs to peddle. It is a wonder that the company has not been more successful in creating custom silicon. At the growing volumes that Meta Platforms is pondering for the rest of the decade and beyond, the economics of homegrown chips are increasingly attractive. It all comes down to building the team, or partnering to get semi-custom iron.

Perhaps the most important thing that Zuckerberg gets by putting these three people in their positions is someone to blame when things go wrong, which every CEO needs. Zuck also has someone to credit when things go right, which every CEO needs as well. No matter what, Zuck is in charge. He holds around 14 percent of the company’s outstanding shares, but 61 percent of the voting power.
