Meta’s election strategy: Misinformation, AI, and ad transparency

March 18, 2025

As Australians prepare to vote in the upcoming federal election, Meta has outlined its strategy to ensure platform integrity and combat misinformation across Facebook, Instagram, and Threads.

Cheryl Seeto, Meta’s head of policy in Australia, said the company understands its role as a major platform for public discourse and is taking that responsibility seriously.

“Our election integrity approach has been shaped by learnings from past elections globally, including in the US, India, the EU, Brazil, and Indonesia in 2024. We’re applying those lessons here in Australia.

“Our focus is on providing a platform for people to express their opinions and engage in public discourse while reducing the spread of misinformation and connecting people with reliable election information,” Seeto said.

Cheryl Seeto, Meta’s head of policy in Australia

Fact-checking and media literacy initiatives

Meta will continue its partnership with Agence France-Presse (AFP) and the Australian Associated Press (AAP) as part of its third-party fact-checking program. Any content debunked by these organisations will be labelled with warnings and down-ranked to limit its reach across Meta’s platforms.

In addition, Meta is collaborating with AAP on a media literacy campaign to help Australians critically assess online content. This initiative will run in the lead-up to the election to further combat misleading information.

Meta’s Community Standards will also remain in force, ensuring the removal of content that could incite violence, interfere with voting, or pose other serious risks.

Collaboration with the Australian Electoral Commission

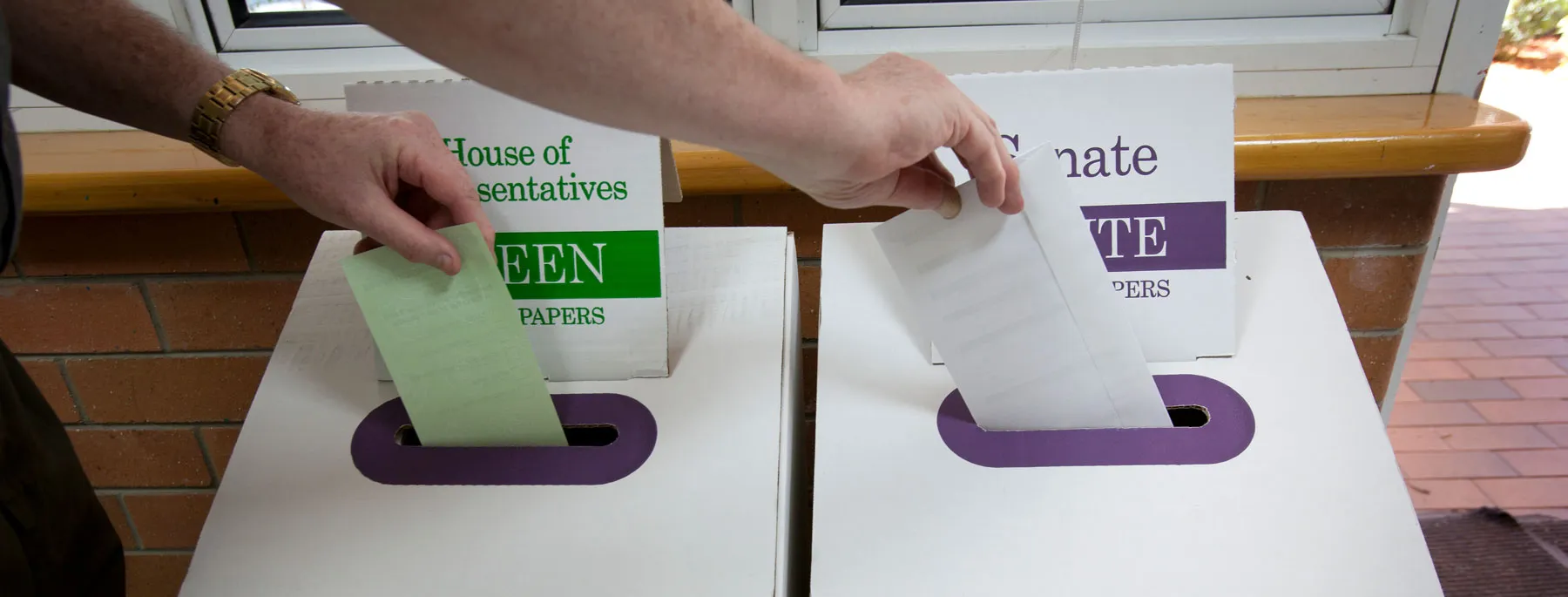

To encourage voter participation, Meta is working with the Australian Electoral Commission (AEC) to deliver in-app notifications across Facebook and Instagram.

These prompts will begin one week before the election and direct Australians to official AEC voting information. On election day, Meta will send a further reminder notification pointing users to verified electoral information.

Seeto said the platform is also working with the AEC to respond to voter questions on Meta’s platforms with authoritative information and official links.

Additionally, Instagram voting stickers will be available, allowing users to share their participation via Stories.

Managing AI-generated content in political ads

Meta says all AI-generated content flagged by independent fact-checkers will be labelled “altered” and down-ranked to limit its reach.

A new rule will also require advertisers to disclose when AI or other digital techniques are used to create or alter political ads in specific ways.

While concerns over generative AI’s impact on elections remain high, Seeto said that in previous elections the risks “did not materialise in a significant way.” She added: “While there were instances of confirmed or suspected AI use, the volumes remained low. Our existing policies and processes proved sufficient to reduce the risk around generative AI content during the election period.”

Seeto clarified that these ad policies apply not just to election-related ads, but also to social issue ads covering topics such as human rights, child exploitation, and climate change.

“If we find that an advertiser has not disclosed AI use where required, we will reject the ad, and repeat offences will result in penalties,” she added.

Meta will also label photorealistic AI-generated images in both organic posts and paid ads to improve transparency. This applies to AI-generated content from Meta AI, Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock, helping users distinguish between real and manipulated content.

The advertiser disclosure requirement applies to ads featuring:

• Digitally altered depictions of real individuals saying or doing things they did not actually say or do.

• AI-generated people or events that never happened.

• Manipulated footage of real events.

Users posting AI-generated content will be encouraged to self-disclose, and Meta will apply labels accordingly. If AI-generated content poses a high risk of deceiving the public on critical issues, Meta may apply more prominent warning labels.

Despite these precautions, Seeto reiterated that AI’s role in past elections has been relatively minor.

“Ratings on AI content related to elections, politics, and social issues represented less than 1% of all fact-checked misinformation,” she said.

Meta has also signed the 2024 Tech Accord to Combat Deceptive Use of AI in Elections and is collaborating with the Partnership on AI to establish industry-wide standards for mitigating the risks of generative AI.

Countering election and voter interference

Meta says it is ramping up efforts to prevent foreign and domestic election interference. The company has built specialised global teams to detect and remove covert influence operations, having dismantled over 200 deceptive networks since 2017.

Additionally, Meta continues to label state-controlled media on Facebook, Instagram, and Threads, ensuring users can identify content from government-influenced publications.

Meta is also reviewing and updating its election-related policies to tackle:

• Coordinated harm

• Voter interference

• Hate speech

• Election-related misinformation (both human- and AI-generated)

These enforcement measures will apply to all content types, ensuring a consistent and adaptive response to emerging election threats.

Scaling up efforts to combat scams

Seeto also highlighted Meta’s ongoing efforts to combat scams, particularly fraudulent ads featuring politicians.

“We’ve significantly scaled up our work tackling scams over the past year, especially those exploiting public figures. Any content that violates our fraud and scam policies is removed as soon as we detect it,” she said.

Meta is also working closely with the National Anti-Scam Centre, allowing government agencies to report scams that may have slipped through Meta’s detection systems.

“This enables us to investigate further, identify wider scam networks, and enforce actions at scale,” Seeto added.
