The Next Frontier of Super Intelligence: Google, Meta, NVIDIA… Tech Giants Are Doubling

September 29, 2025

AI giants such as Google DeepMind, Meta, and NVIDIA are shifting their R&D focus toward “world models” in an effort to gain a competitive edge in the race toward machine “superintelligence.” World models learn from video and robotics data to comprehend the physical world, offering broad application potential. According to a senior executive at NVIDIA, the potential market size could reach up to $100 trillion, encompassing sectors like autonomous driving, robotics, and manufacturing.

As advancements in large language model technology begin to slow, a new AI race centered around “world models” is quietly unfolding among tech giants. This trend marks a potential shift in the focus of competition within the AI field, moving from language to the understanding and simulation of the physical world.

According to a report by the Financial Times on September 29, companies such as Google DeepMind, Meta, and NVIDIA are striving to take the lead by developing a new type of system. These systems no longer rely solely on text; instead, they learn from video and robotics data to understand and navigate the physical world.

“World models” are seen as a key step in advancing autonomous driving, robotics, and so-called “AI agents,” but their training also faces significant challenges in terms of data and computational power.

Simulating the Physical World: Latest Technological Breakthroughs

In recent months, several AI companies have successively announced progress in the field of “world models,” highlighting the growing momentum in this area.

Last month, Google DeepMind released Genie 3, a model that generates video frame by frame while taking past interactions into account, a departure from the traditional approach of generating an entire video at once. Shlomi Fruchter, co-lead of the Genie 3 project, stated that by constructing environments that simulate the real world, AI can be trained in a more scalable manner without “bearing the consequences of mistakes made in the real world.”

Meta is attempting to mimic the way children learn passively by observing the world, using raw video content to train its V-JEPA model. Meta’s Fundamental AI Research lab (FAIR, formerly Facebook AI Research), led by Chief AI Scientist Yann LeCun, released the second version of this model in June and has begun testing it on robots.

Meanwhile, Jensen Huang, CEO of chip giant NVIDIA, asserted that the company’s next major growth phase will come from “physical AI,” with these new models set to revolutionize the field of robotics. NVIDIA is leveraging its Omniverse platform to create and run such simulations to support its expansion into the robotics sector.

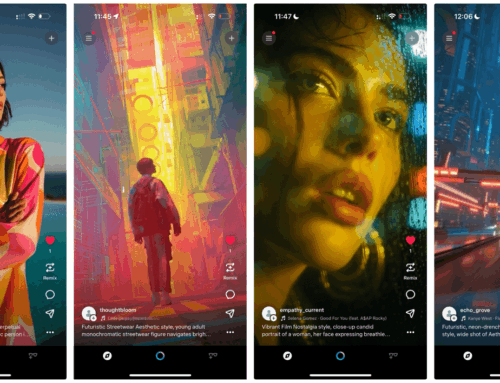

One recent application of “world models” is in the entertainment industry. World Labs, a startup founded by AI pioneer Fei-Fei Li, is developing a model that can generate video game-like 3D environments from a single image.

Runway, a video generation startup, also launched a product last month that uses “world models” to create gaming scenarios. Its CEO, Cristóbal Valenzuela, pointed out that, compared with previous models, a “world model” system understands and reasons about the physical laws within a scene more effectively.

Why Are the Giants Betting on This New Track?

A core driver behind tech giants turning their attention to “world models” lies in the widespread belief within the industry that large language models (LLMs) are reaching their capability ceiling.

Despite significant investment, the performance gains of next-generation LLMs released by companies such as OpenAI, Google, and Elon Musk’s xAI have started to slow.

Yann LeCun, Meta’s Chief AI Scientist and widely regarded as one of the founding fathers of modern AI, has consistently warned that LLMs will never achieve human-like reasoning and planning capabilities.

Building “world models,” however, requires collecting massive amounts of data about the physical world and immense computational power, a significant technical challenge that has yet to be overcome. Companies like NVIDIA and Niantic are attempting to fill the data gap by using models to generate or predict environments.

Despite the promising outlook, the path toward mature “world models” remains long. Figures like Yann LeCun of Meta believe it may take another decade to realize machines with human-level intelligence powered by next-generation AI systems.

Editor: Jayden
